In this article, we go step by step through adding a Robots file to your web site. Web spiders are usually called "robots", and when they request a web page, they identify themselves with a name called their "User-agent".

A Robots file is a suggestion, or request, that you make to web spiders, asking them not to index certain files or folders on your web site.

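It is important to realize that the directives in the resulting robots.txt file are purely advisory: well-behaved crawlers follow them, but nothing forces a crawler to obey them. The file is therefore no substitute for real access control.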
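To see what "advisory" means in practice, here is a minimal sketch of the check a well-behaved crawler performs before fetching a page. It uses Python's standard `urllib.robotparser` module. The robots.txt content, the URLs and the `polite_crawl` helper are hypothetical. The parser only answers whether a URL may be fetched, and respecting that answer is entirely up to the crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /PrivateDirectory/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_crawl(urls, user_agent, parser):
    """Return only the URLs that a well-behaved crawler would go on to fetch.

    The parser merely answers "may I?"; a crawler that skips this check
    can still request every URL in the list.
    """
    return [url for url in urls if parser.can_fetch(user_agent, url)]


candidates = [
    "http://www.example.com/default.aspx",
    "http://www.example.com/PrivateDirectory/report.html",
]
print(polite_crawl(candidates, "ExampleBot/1.0", build_parser(ROBOTS_TXT)))
# ['http://www.example.com/default.aspx']
```

Against a live site, `RobotFileParser` can instead download the file itself through `set_url()` and `read()`.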

Adding Robots Files to your Web Site

Before we go step by step through making a Robots file, let's look at the basics of the robots.txt syntax with these examples:

All robots may access all files:

    User-agent: *
    Allow: /
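Python's standard `urllib.robotparser` module is a convenient way to check what a set of rules actually permits. This is a minimal sketch using only the standard library. It confirms that the `Allow: /` group grants access everywhere. It also shows that an empty `Disallow:` line behaves identically in this parser; that line is the older way of writing the same rule, since the original robots convention had no `Allow` directive. The URLs and the `ExampleBot` name are placeholders.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Parse robots.txt text held in memory and ask whether url may be fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The "allow everything" group from the article.
ALLOW_ALL = "User-agent: *\nAllow: /\n"

# Older equivalent: an empty Disallow value means "nothing is disallowed".
EMPTY_DISALLOW = "User-agent: *\nDisallow:\n"

for rules in (ALLOW_ALL, EMPTY_DISALLOW):
    for url in ("http://www.example.com/", "http://www.example.com/file1.aspx"):
        print(allowed(rules, "ExampleBot", url))  # True every time
```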

No robot may access certain files:

    User-agent: *
    Disallow: /file1.aspx
    Disallow: /file2.html
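Two details of how these rules are matched are worth checking. First, a `Disallow` value is treated as a path prefix, and in Python's `urllib.robotparser` the comparison is case-sensitive. This matters on IIS, which typically serves the same page for `/file1.aspx` and `/FILE1.ASPX`. Second, keep a group's lines together. This parser treats a blank line as the end of a record, as the original robots convention does, so a blank line between `User-agent` and `Disallow` silently drops the rule. This is a minimal sketch, and the URLs and `ExampleBot` are placeholders.

```python
from urllib.robotparser import RobotFileParser

SITE = "http://www.example.com"


def parser_for(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text held in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# The group from the article, with no blank lines inside it.
together = parser_for(
    "User-agent: *\n"
    "Disallow: /file1.aspx\n"
    "Disallow: /file2.html\n"
)

# Exact match, prefix match (query string), different case, unrelated file.
for path in ("/file1.aspx", "/file1.aspx?id=7", "/FILE1.ASPX", "/file3.aspx"):
    print(path, together.can_fetch("ExampleBot", SITE + path))
# /file1.aspx False
# /file1.aspx?id=7 False
# /FILE1.ASPX True
# /file3.aspx True

# The same rules with blank lines between them: this parser ends the record
# at the first blank line and ignores the Disallow lines that follow it.
split_up = parser_for(
    "User-agent: *\n"
    "\n"
    "Disallow: /file1.aspx\n"
    "\n"
    "Disallow: /file2.html\n"
)
print(split_up.can_fetch("ExampleBot", SITE + "/file1.aspx"))  # True
```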

No robot may access a certain directory:

    User-agent: *
    Disallow: /PrivateDirectory/
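Because a `Disallow` value is matched as a prefix, the trailing slash in `/PrivateDirectory/` matters. It blocks everything inside the folder, but it does not block the folder name without the slash, or a sibling folder such as `/PrivateDirectoryOld/`. Writing `Disallow: /PrivateDirectory` without the slash would block all three. Here is a sketch with Python's `urllib.robotparser`; the paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

SITE = "http://www.example.com"
PATHS = [
    "/PrivateDirectory/secret.html",   # a file inside the folder
    "/PrivateDirectory",               # the folder name without a trailing slash
    "/PrivateDirectoryOld/page.html",  # a different folder sharing the prefix
]


def blocked_paths(rule: str) -> list:
    """Return the PATHS that a '*' group with this Disallow value blocks."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", "Disallow: " + rule])
    return [p for p in PATHS if not parser.can_fetch("ExampleBot", SITE + p)]


print(blocked_paths("/PrivateDirectory/"))
# ['/PrivateDirectory/secret.html']
print(blocked_paths("/PrivateDirectory"))
# ['/PrivateDirectory/secret.html', '/PrivateDirectory', '/PrivateDirectoryOld/page.html']
```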

A specific robot may not access certain directories (replace SomeRobot with the crawler's actual user-agent name, for example Googlebot):

    User-agent: SomeRobot
    Disallow: /PrivateDirectory/
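A group applies only to the crawlers named in its `User-agent` line, so in the example above every other crawler is unaffected. A site often combines a named group with a `*` group. In that case, a crawler follows the group that names it and ignores the `*` group. The sketch below checks both cases with Python's `urllib.robotparser`. `SomeRobot`, `OtherBot` and the `/Drafts/` folder are placeholders. This particular parser matches names loosely: it is case-insensitive and compares against the part of the agent string before any `/`. That behavior is a detail of this implementation.

```python
from urllib.robotparser import RobotFileParser

SITE = "http://www.example.com"

# Hypothetical robots.txt: SomeRobot is kept out of /PrivateDirectory/ only,
# because its own group has no /Drafts/ rule; every other crawler falls back
# to the "*" group and is kept out of /Drafts/ only.
ROBOTS_TXT = """\
User-agent: SomeRobot
Disallow: /PrivateDirectory/

User-agent: *
Disallow: /Drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("SomeRobot/2.1", "OtherBot"):
    for path in ("/PrivateDirectory/a.html", "/Drafts/b.html"):
        print(agent, path, parser.can_fetch(agent, SITE + path))
# SomeRobot/2.1 /PrivateDirectory/a.html False
# SomeRobot/2.1 /Drafts/b.html True
# OtherBot /PrivateDirectory/a.html True
# OtherBot /Drafts/b.html False
```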

After this short introduction, let's add a Robots file.

Adding Robots Files to your Web Site - Web Spiders

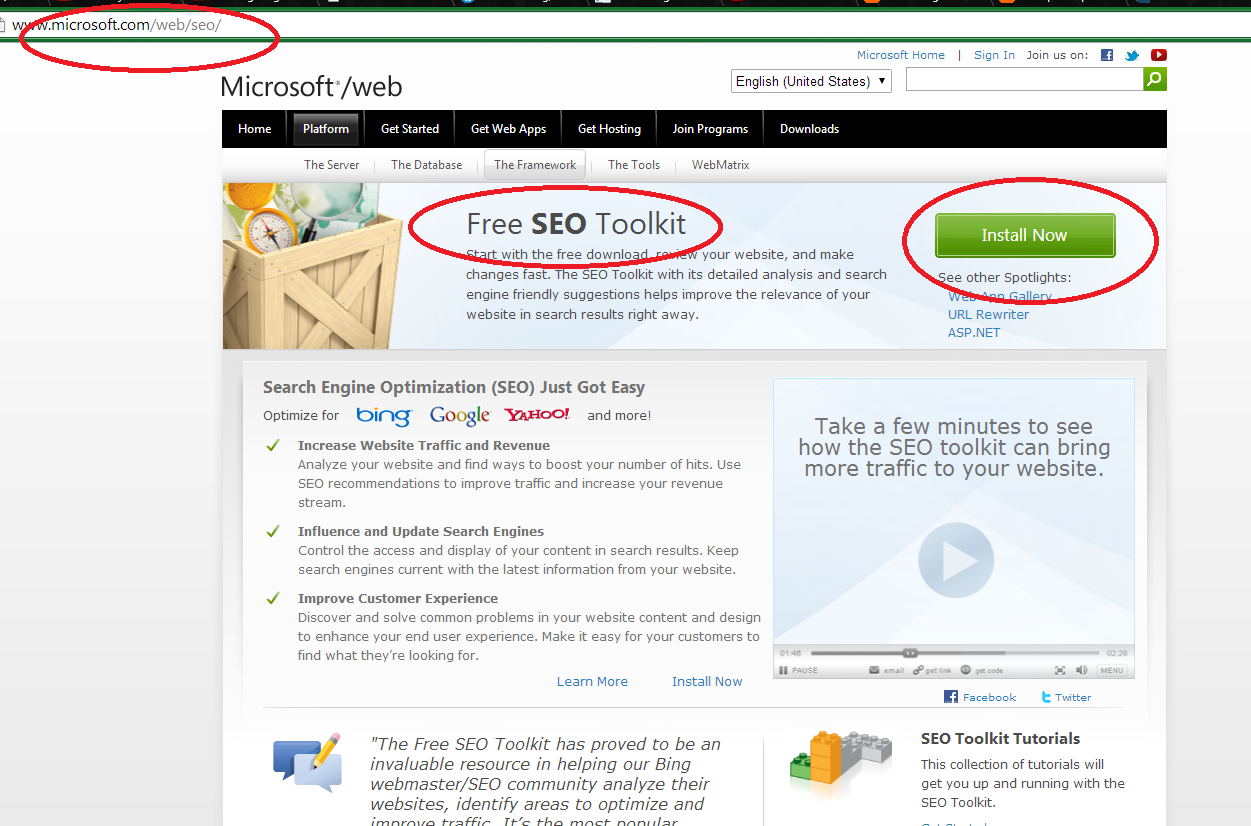

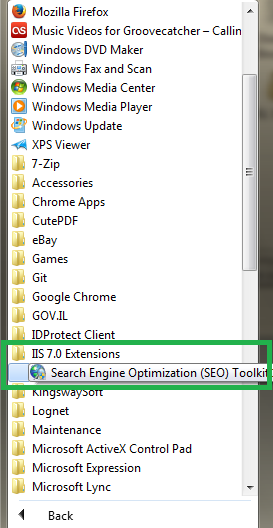

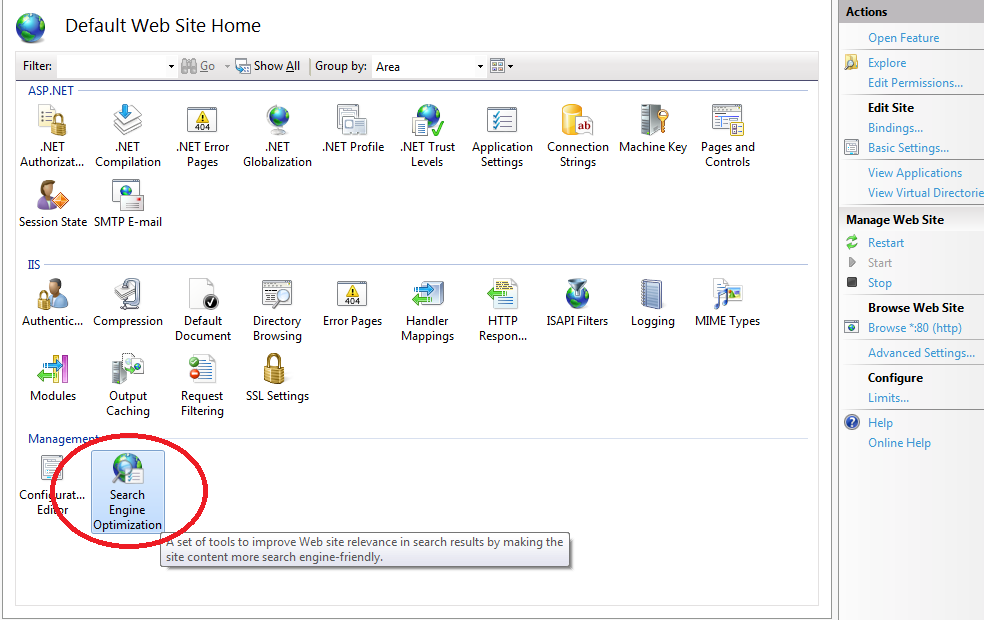

Open IIS Manager (press Windows+R, type "inetmgr" and press Enter), then select your web site and open the Search Engine Optimization (SEO) feature:

First, add a sitemap if you don't already have one:

After you have created the new sitemap, click "Add a new disallow rule":

Here, select the URLs of the files and directories that you don't want indexed:

For example:

Now let's add a rule that allows our web site to be indexed:

Select the whole web site to be indexed:

Now let's see our rules:

As you can see, ALL user-agents are ALLOWED to index our site, except for the paths covered by the Disallow rules:
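When `Allow` and `Disallow` rules overlap like this, not every parser resolves them the same way. RFC 9309 and Google's documentation say the most specific (longest) matching rule wins. Python's `urllib.robotparser`, by contrast, applies the first matching line in file order. Writing the `Disallow` lines before `Allow: /` gives the same result under both approaches. This is a minimal sketch using only the standard library. The paths are placeholders, and it makes no claim about the order in which the toolkit writes its own file.

```python
from urllib.robotparser import RobotFileParser

SITE = "http://www.example.com"


def first_match_allows(robots_txt: str, path: str) -> bool:
    """Ask Python's first-match parser whether path may be fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("ExampleBot", SITE + path)


# Disallow rule first: the first match is also the longest match.
DISALLOW_FIRST = """\
User-agent: *
Disallow: /PrivateDirectory/
Allow: /
"""

# Allow first: a longest-match crawler still blocks /PrivateDirectory/,
# but Python's parser stops at "Allow: /" and permits everything.
ALLOW_FIRST = """\
User-agent: *
Allow: /
Disallow: /PrivateDirectory/
"""

print(first_match_allows(DISALLOW_FIRST, "/PrivateDirectory/x.html"))  # False
print(first_match_allows(ALLOW_FIRST, "/PrivateDirectory/x.html"))     # True
print(first_match_allows(DISALLOW_FIRST, "/default.aspx"))             # True
```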

Now let's look at the robots.txt file that was just created automatically in the root folder of the web site:

It looks like this:

That's all! In the same way, you can select any other files or folders to exclude from indexing.
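If you would rather generate the file from a script than through the IIS Manager screens, the rule set described above is simple to write out. This is a minimal sketch, assuming you keep the excluded paths in a Python list. `write_robots_txt`, the temporary output folder and the sitemap URL are hypothetical, and the output is not meant to reproduce the toolkit's file line for line. The Disallow lines are written before `Allow: /` so that first-match and longest-match parsers agree. The file is then read back with `urllib.robotparser` as a quick check; note that `site_maps()` requires Python 3.8 or later.

```python
import os
import tempfile
from urllib.robotparser import RobotFileParser


def write_robots_txt(path, disallowed, sitemap_url=None):
    """Write a robots.txt that blocks the `disallowed` paths for all crawlers.

    Disallow lines come before "Allow: /" so that first-match parsers
    (such as urllib.robotparser) and longest-match parsers agree.
    """
    lines = ["User-agent: *"]
    lines += ["Disallow: " + p for p in disallowed]
    lines.append("Allow: /")
    if sitemap_url:
        lines += ["", "Sitemap: " + sitemap_url]
    with open(path, "w", encoding="utf-8", newline="\n") as f:
        f.write("\n".join(lines) + "\n")


# Hypothetical output location; on a real site robots.txt must be served
# from the root of the host, e.g. http://www.example.com/robots.txt.
out = os.path.join(tempfile.mkdtemp(), "robots.txt")
write_robots_txt(out, ["/file1.aspx", "/PrivateDirectory/"],
                 sitemap_url="http://www.example.com/sitemap.xml")

# Read the file back and check it with Python's own parser.
parser = RobotFileParser()
with open(out, encoding="utf-8") as f:
    parser.parse(f.read().splitlines())

print(parser.can_fetch("ExampleBot", "http://www.example.com/PrivateDirectory/a.html"))  # False
print(parser.can_fetch("ExampleBot", "http://www.example.com/default.aspx"))  # True
print(parser.site_maps())  # ['http://www.example.com/sitemap.xml']
```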

You can learn about the free IIS SEO Toolkit, including how to install it and how to use it to analyze and optimize your web site, in this tutorial.

By Carmel Shvartzman

Edited by: Carmel Shvartzman